Diffusers

Diffusers is a text-to-image generation plugin built on the Diffusers library. It supports Stable Diffusion and all of its compatible models, allowing you to generate images from prompts.

Usage

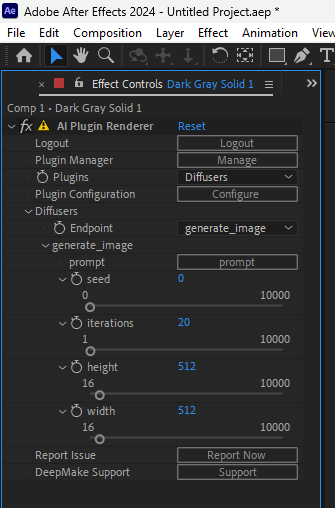

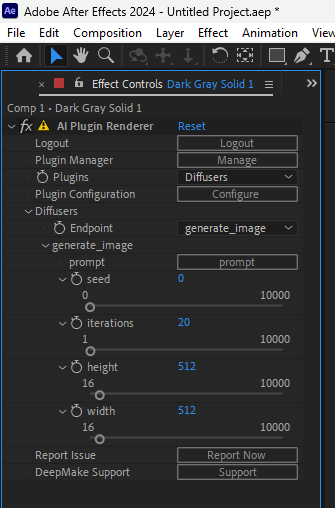

To use Diffusers, select the Diffusers plugin from the plugin selector.

There are two endpoints available for Diffusers: generate_image and refine_image.

Generate_Image

To generate an image from a prompt, use the generate_image endpoint.

generate_image creates an entirely new image, replacing whatever else is in the layer. If you want to modify an existing image, use refine_image instead.

This endpoint takes several parameters:

Prompt

Enter a prompt by clicking the "Prompt" button, which opens a separate window where you can type it.

This prompt is a text description of the image that you want to generate.

For advice on creating the best prompt, see the #prompt_help channel in our

Seed

Seed lets you get a different variant of the image without changing the prompt. Each seed value produces a different image from the same prompt. If you like the prompt but don't quite like the resulting image, try a new seed to get another one.
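To see why a seed gives repeatable variants, here is a minimal sketch using only the Python standard library. It is not the plugin's code: `starting_noise` is a hypothetical stand-in for the random noise that the diffusion process starts from, and a real pipeline would draw a much larger tensor.

```python
import random


def starting_noise(seed: int, count: int = 4) -> list[float]:
    """Return `count` Gaussian samples drawn from a generator fixed by `seed`."""
    rng = random.Random(seed)  # the seed fully determines the sequence
    return [rng.gauss(0.0, 1.0) for _ in range(count)]


same_a = starting_noise(1234)
same_b = starting_noise(1234)
other = starting_noise(1235)

print(same_a == same_b)  # same seed, same starting noise
print(same_a == other)   # different seed, different starting noise
```

Because the prompt and the starting noise together decide the result, keeping the seed fixed while editing the prompt is a handy way to compare prompt changes.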

Iterations

Iterations is the number of cycles of the diffusion process to run. Low iteration counts generate faster but can cause artifacts. Higher counts reach a point of diminishing returns, but increasing the number of iterations is sometimes worthwhile to remove artifacts.

Height

Height is the height of the image generated by the Stable Diffusion tool. Note that changing the size of the image changes the image that is generated. If you want a higher quality version of the same image, either upscale the image or resize the output and send it through the refine_image endpoint to refine the results.

Width

Width is the width of the generated image. See Height for notes on image size.
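If you resize an image before refining it, the new dimensions can be worked out as in the sketch below. `scaled_size` is a hypothetical helper, not part of the plugin. It assumes each side should be a multiple of 8, which is what the Stable Diffusion pipelines in the Diffusers library require; whether the plugin adjusts sizes for you is not covered here.

```python
def scaled_size(width: int, height: int, factor: float, multiple: int = 8) -> tuple[int, int]:
    """Scale a size by `factor`, rounding each side down to a multiple of `multiple`."""
    if factor <= 0:
        raise ValueError("factor must be positive")

    def snap(value: int) -> int:
        scaled = int(value * factor)
        # Round down to the nearest multiple, but never below one multiple.
        return max(multiple, (scaled // multiple) * multiple)

    return snap(width), snap(height)


print(scaled_size(512, 512, 1.5))
print(scaled_size(500, 300, 1.0))
```

Rounding down rather than to the nearest multiple keeps the result from exceeding the size you asked for.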

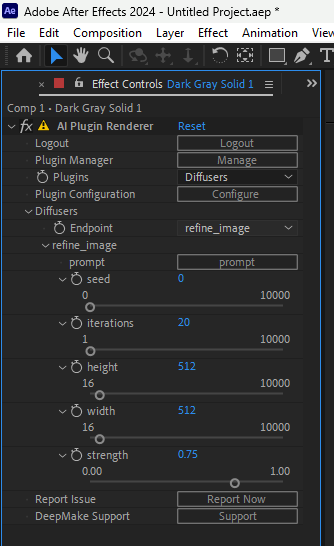

Refine_Image

refine_image is used to refine or modify an image as a complete image. This can be useful, for example, to change the style or coloring, or to use Stable Diffusion as a sort of "quality increase" if you don't care about the consistency of the output. It can also be used along with masking or careful selection to remove or change items in the image.

The options are mostly the same as for generate_image, with the exception of Strength.

An important note is that unlike generate_image, the refine_image endpoint will take the current layer and modify it, creating a new image that takes from both the input and the prompt.

Prompt

Enter a prompt by clicking the "Prompt" button, which opens a separate window where you can type it.

This prompt is a text description of the image that you want to generate.

For advice on creating the best prompt, see the #prompt_help channel in our

Seed

Seed lets you get a different variant of the image without changing the prompt. Each seed value produces a different image from the same prompt. If you like the prompt but don't quite like the resulting image, try a new seed to get another one.

Iterations

Iterations is the number of cycles of the diffusion process to run. Low iteration counts generate faster but can cause artifacts. Higher counts reach a point of diminishing returns, but increasing the number of iterations is sometimes worthwhile to remove artifacts.

Height

Height is the height of the image generated by the Stable Diffusion tool. Note that changing the size of the image changes the image that is generated. If you want a higher quality version of the same image, either upscale the image or resize the output and send it through the refine_image endpoint to refine the results.

Width

Width is the width of the generated image. See Height for notes on image size.

Strength

Strength is how much the original image is allowed to change in the output. If set to 0.0, the original image is returned essentially unchanged. If set to 1.0, refine_image works much like generate_image, creating an entirely new image with almost no connection to the input image. Try small values in the 0.1 to 0.25 range to make small changes, such as stylistic changes to an image. Values greater than 0.5 lead to images that have only the lightest connection to the original image.
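As a rough illustration of how Strength interacts with Iterations: the image-to-image pipelines in the Diffusers library run only a fraction of the requested steps, in proportion to strength. The sketch below mirrors that calculation. It assumes the plugin passes both values through to Diffusers unchanged, which is not confirmed here, and `effective_steps` is a hypothetical name.

```python
def effective_steps(iterations: int, strength: float) -> int:
    """Approximate how many denoising steps image-to-image actually runs."""
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0.0 and 1.0")
    return min(int(iterations * strength), iterations)


for strength in (0.0, 0.2, 0.5, 1.0):
    print(strength, effective_steps(50, strength))
```

Under that assumption, 50 iterations at a strength of 0.2 run about 10 steps, so low strengths tend to be faster as well as gentler.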

Using other models

Stable Diffusion has a lot of models -- both official and unofficial -- available for use.

We support the major models, from the original Stable Diffusion all the way up to SDXL, Turbo, and Cascade, as well as custom models that are supported by Diffusers.

Changing the model

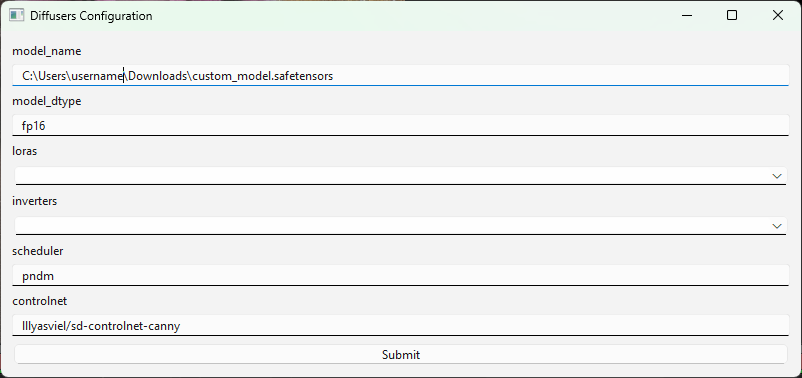

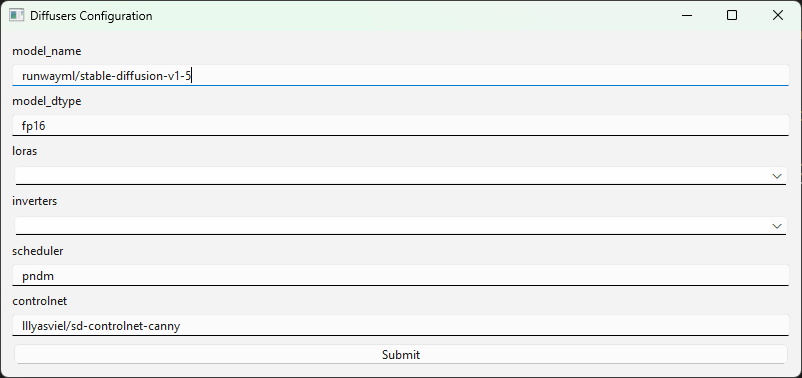

First, click the "Configure" button under the Plugin drop-down while you have Diffusers selected.

This opens a configuration window with various parameters you can change. For now, we'll only look at model_name.

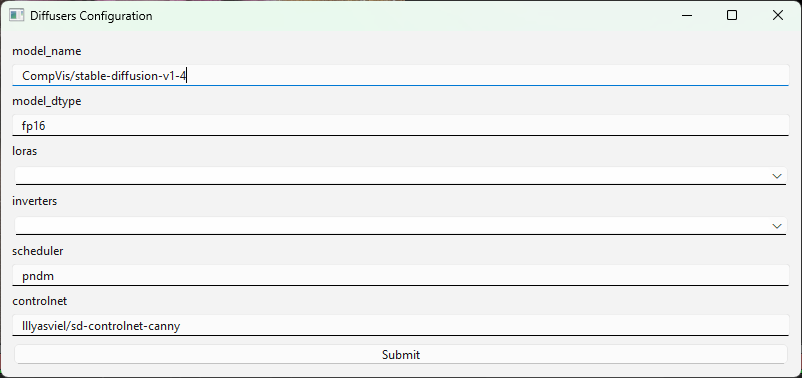

To use a model from Hugging Face, put the user/model name from the URL into the model_name field.

For example, to use Stable Diffusion 1.4 from https://huggingface.co/CompVis/stable-diffusion-v1-4, put CompVis/stable-diffusion-v1-4 in the model_name field.

Here is a list of the official models:

- CompVis/stable-diffusion-v1-1

- CompVis/stable-diffusion-v1-2

- CompVis/stable-diffusion-v1-3

- CompVis/stable-diffusion-v1-4

- runwayml/stable-diffusion-v1-5 (The default model)

- stabilityai/stable-diffusion-2

- stabilityai/stable-diffusion-2-1

- stabilityai/stable-diffusion-xl-base-1.0

- stabilityai/sdxl-turbo ( WARNING: Has a non-commercial license )

- stabilityai/stable-cascade ( WARNING: Has a non-commercial license )

You may also use any custom Stable Diffusion compatible model. We support both .pth and .safetensors versions of models. To use one, download the model to your local system, then copy the path to the model file into the model_name field.
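Since model_name accepts either a Hugging Face user/model name or a path to a local file, it can help to check which kind of value you have before pasting it in. The sketch below is a hypothetical helper, not the plugin's actual logic: `classify_model_name` and its return values are assumptions made for illustration, and the pattern for Hugging Face names is only a rough approximation.

```python
import re
from pathlib import PurePath

# Extensions this documentation lists for local model files.
LOCAL_EXTENSIONS = {".pth", ".safetensors"}
# Rough shape of a Hugging Face "user/model" identifier.
REPO_ID = re.compile(r"^[\w.-]+/[\w.-]+$")


def classify_model_name(value: str) -> str:
    """Return "local", "hub", or "unknown" for a model_name value."""
    value = value.strip()
    # Check the file extension first, since a relative path can look like user/model.
    if PurePath(value).suffix.lower() in LOCAL_EXTENSIONS:
        return "local"
    if REPO_ID.match(value):
        return "hub"
    return "unknown"


print(classify_model_name("CompVis/stable-diffusion-v1-4"))
print(classify_model_name("/home/me/models/custom.safetensors"))
```

An "unknown" result usually means a typo, such as pasting the full URL instead of just the user/model part.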